Chat, Kratt, and RAG

A software engineer offers tips on how to make AI work harder for you.

At the end of the last century and the beginning of this one, phone directories were popular everywhere. In Estonia, they were advertised to businesses with the slogan, “If you’re not here, you don’t exist.”

Updating that slogan for today’s world might be: “’If you don’t build a RAG, you’ll miss the IT train and have to catch up by taxi.”

With the advent of AI based on large language models, building RAGs (Retrieval Augmented Generation) quickly became popular. These models have nuances that an average user might not immediately grasp.

As an example of a language model, I offer the GPT model created by OpenAI, commonly known as ChatGPT. But I like to use the word kratt.

Chat ↔︎ Kratt 😊

In Estonian folklore, a kratt is a magical creature created to serve its master and gather wealth.

The GPT kratt is a “virtual thinking machine” that, based on the text it receives (the prompt), generates the most likely next sentences or words to respond with (completion).

The kratt has no memory, so it lacks any biases from the past. It doesn’t even remember the previous response it sent you just moments ago, so if something goes wrong, it won’t hold a grudge for long 😊 For a smooth conversation with the kratt, it’s important that the kratt can remember at least most of the content of the discussion. There’s a simple, though initially somewhat unusual, technique for this: during the conversation, it’s helpful to constantly remind the kratt of what has been discussed earlier. The kratt has what’s called a context window, where the previous conversation can be stored. The larger this window, the more previous information it can hold, ensuring that the thread of the conversation doesn’t get lost. You can also add other important information to the context window, such as an explanation that the Estonian word palk, in this context, means “a piece of a tree trunk,” and not “salary.”

Additionally, it’s important to consider that the kratt is temporally frozen – it responds to questions based on what seemed most relevant at the time it was created. However, you can add updated information to the context window, such as “Estonia won the 2024 European Football Championship.” If you add this information and later ask the kratt who won the 2024 Championship, it will respond that Estonia did 😉

“Kratt whispering” or prompt engineering

This is a technique for communicating with the kratt, is actually taught in workshops with names like “Successful Manifestation,” “Goal Setting and Clear Formulation,” “Advanced Role Playing,” and so on 😊

Basically, you need to tell the kratt exactly what you want and in what role the kratt should respond to you. This role part is fascinating 😉 That’s why, for example, I start my GPT kratt whispering with the words: “You are…”

So, if I wanted the kratt to respond to me like a “rude pirate,” I would just need to mention that.

Prompt: You’re a fearsome, rude pirate. What drink do you prefer?

Answer: Arrrg, of course, it’s strong rum, and none of that weak swill! It’s the drink that puts hair on your chest and makes a pirate feel like the true king of the seas! If you see me drinking, it’s always rum, burning like hellfire itself and tasting like revenge! Arrrg, that’s my drink, and you’d best keep your hands off, or I’ll show you why they call me fearsome and rude!

You need to express yourself clearly, unambiguously, and based on the created context. When communicating with a kratt, you must keep in mind that you’ll get what you ask for 😉

For example, if you ask, “How can I achieve financial freedom?”

You might expect an answer like: “Financial freedom is a state where you have enough financial resources to live the lifestyle you desire without worrying about daily expenses. To achieve this…”

But you could also get a response like: “Let yourself be stripped of all finances or give them away. That’s how you’ll achieve financial freedom.”

The token

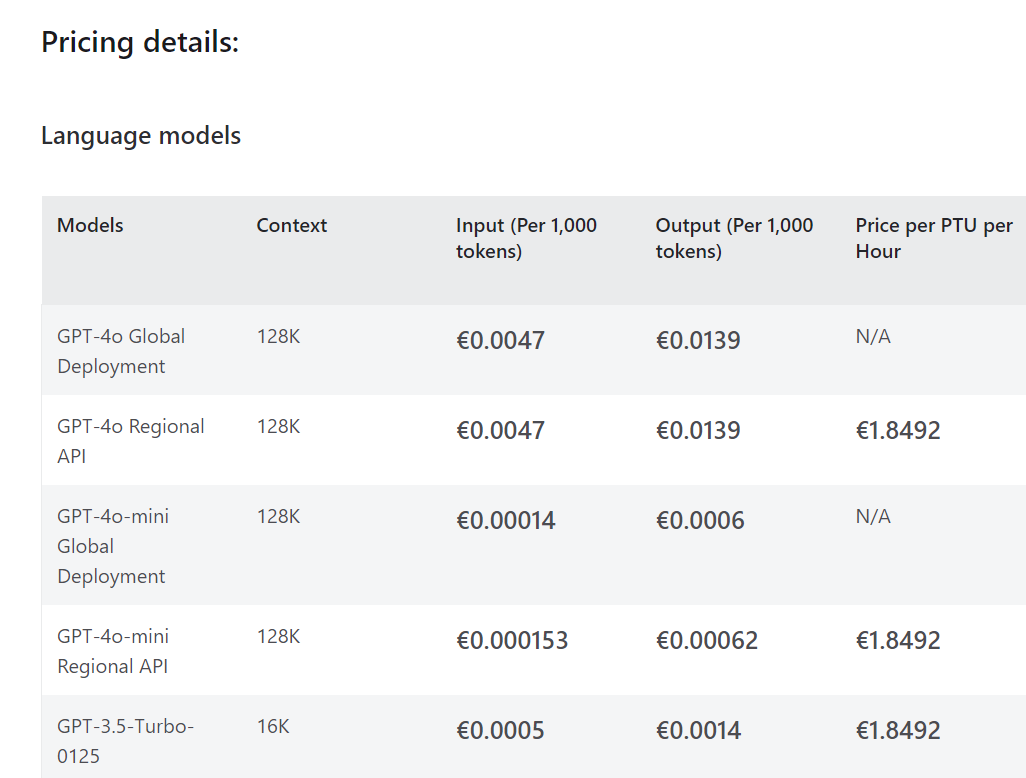

The basic unit for using a kratt is the token. Tokens are what kratt service providers charge you for. In Estonian grammar, one token roughly corresponds to one syllable in a word. When communicating with a kratt, if a paid model is in use, you pay for both the question (input tokens) and the response (output tokens). The saying in Estonian “meil lobisemise eest palka ei maksta” (“We don’t get paid for chit-chat”), becomes especially relevant in this context. A prompt needs to be concise and to the point.

In the table above, there is an excerpt from the current pricing list of Microsoft Azure cloud service OpenAI LLM models.

So, how can you keep costs under control when conversing with a kratt? Here are a few techniques:

- One technique is to take a “basic kratt” and train it to become a “biased kratt,” tailored to your needs. This technique is called fine-tuning. Parents, by the way, do this every day. They add their own information patterns and behavior patterns to a child’s basic personality.

- Another technique is to find shortcuts. Instead of providing lengthy explanations of context, you can find a magical short phrase. Remember the roles? 😉 You only had to tell the kratt to act like a rude pirate. I didn’t need to explain how a rude pirate should behave — the kratt already drew that from its “consciousness,” aligning with my desired vision of a rude pirate.

- The third option is to somehow avoid resending the entire lengthy conversation each time. The goal is to keep the kratt’s context window as small but relevant as possible. If you previously talked to the kratt about “the garden” and are now discussing “the hole,” there’s no need to keep re-sending the garden-related conversation each time.

Now back to RAG

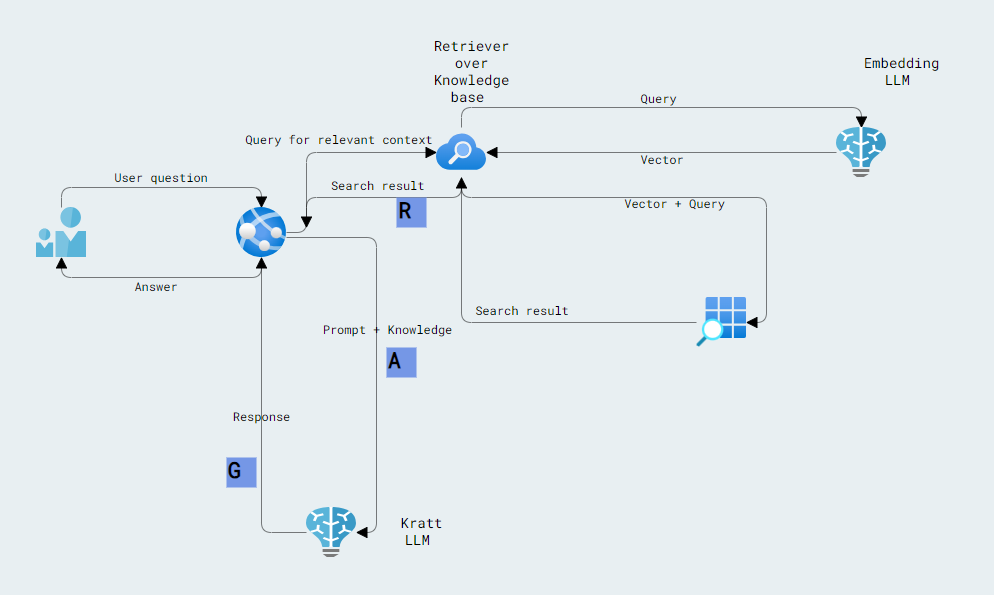

To keep a conversation with kratt relevant, up-to-date, and context-based – RAG comes into play.

RAG is essentially an architecture that enhances the capabilities of the kratt. It’s like a kratt on steroids.

The key components, in addition to the large language model (LLM), are a knowledge base and a search engine. There may also be automatic tools that try to improve a user’s poorly phrased prompt and create the necessary context.

What does this achieve?

- The kratt gains real-time knowledge that it didn’t have at the time of its creation.

- The kratt can use information that isn’t available on the internet – such as business secrets or other confidential data.

The prompt becomes more effective and cost-efficient. It’s as if the user is having a specialist rephrase the question to make it clearer and more understandable.

LLMOps

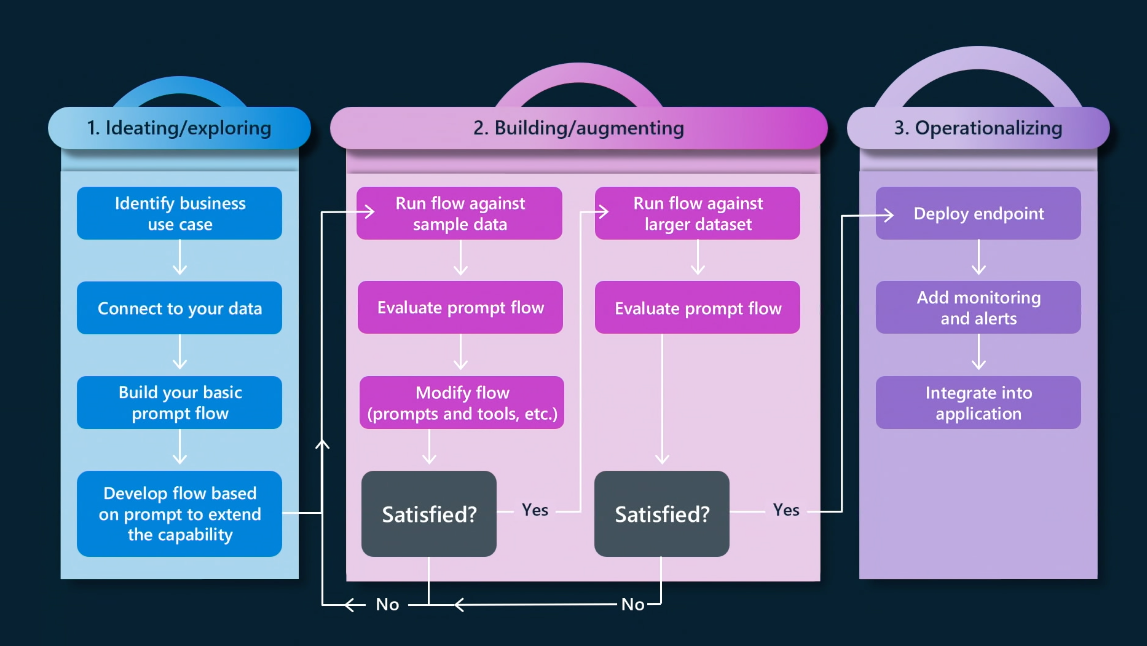

In the world of AI, the focus now moves from MLOps (workflow for building ML apps) to LLMOps (workflow for building generative AI apps), starting with prompt engineering, a process where we refine the inputs to the LLM (prompts) through a process of trial and error (build-run-evaluate) until the responses meet our quality, cost, and performance requirements.

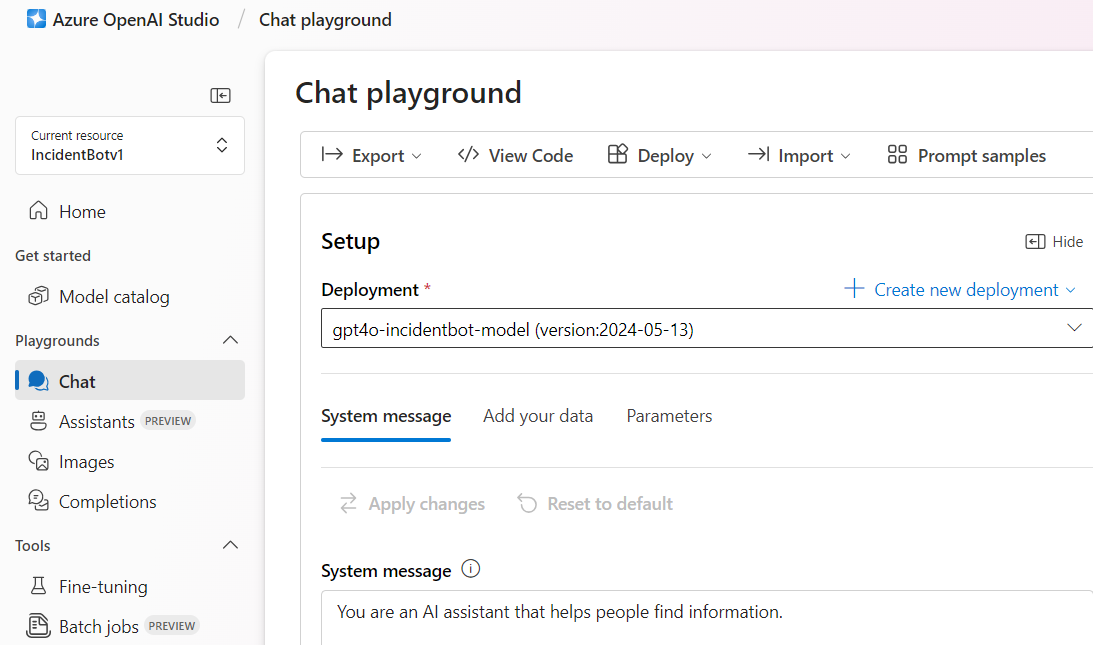

In the Azure cloud environment, there are excellent tools available for this workflow, Azure AI Studio or Azure OpenAI Studio.

In the world of kratt, everything changes rapidly. Every day, new technologies emerge that simplify processes but also bring new challenges. For instance, between the time I wrote this article and the time it was published, I’ve encountered innovations like a “graph prompt” and a “built-in prompt cache.” Software development itself is also evolving, shifting from strictly programming language-based development to a process akin to LLMOps. We are indeed living in an interesting and fast-changing era, where each day brings something new and unexpected.

About Proekspert

Proekspert is a skilled software development company with over 30 years of experience. We have encountered many diverse approaches to equipment, software engineering, and cybersecurity. Our expertise covers embedded software, device-cloud integrations, technician apps, and portals.